After learning about Zipf's law, I wanted to find those patterns myself. I am learning Python 3 now, and I was looking at examples of how to read text files and the individual lines in them. I found the complete works of Shakespeare on this page: http://shakespeare.mit.edu/

More information on Zipf's law is available here:

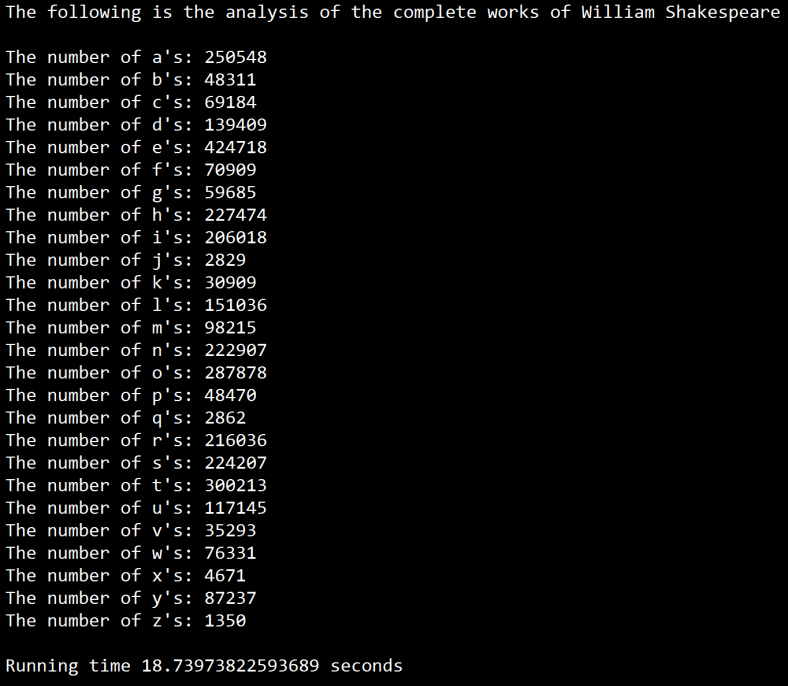
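For reference, Zipf's law is usually stated as follows: if the words of a text are ranked by frequency, the frequency $f$ of the word with rank $r$ is roughly proportional to a negative power of the rank, with an exponent $s$ close to 1 for natural-language text. Taking logarithms turns this into a straight line with slope $-s$ on a log-log plot, which is the usual way to look for the pattern.

$$
f(r) \approx \frac{C}{r^{s}}, \qquad \log f(r) \approx \log C - s \log r, \qquad s \approx 1
$$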

After saving the text from the web pages into text files, I imported these files into the Python environment and analyzed them. The analysis of the complete works led to the following observations. Presented below is the output of the Python console. I used Anaconda 4.2.0 with Python 3.5. The goal is to count the number of occurrences of each letter of the English alphabet.

| Letter | Count |
|--------|-------:|
| e | 424718 |
| t | 300213 |
| o | 287878 |
| a | 250548 |
| h | 227474 |
| s | 224207 |
| n | 222907 |
| r | 216036 |
| i | 206018 |
| l | 151036 |
| d | 139409 |
| u | 117145 |
| m | 98215 |
| y | 87237 |
| w | 76331 |
| f | 70909 |
| c | 69184 |
| g | 59685 |
| p | 48470 |
| b | 48311 |
| v | 35293 |
| k | 30909 |
| x | 4671 |
| q | 2862 |
| j | 2829 |
| z | 1350 |
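Counts like the ones in the table can be produced by reading each text file line by line and tallying the letters with `collections.Counter`. The sketch below is one way to do it, not necessarily the exact code behind the table. It assumes the plays and poems were saved as UTF-8 `.txt` files in a single folder, that upper- and lower-case letters are counted together, and that only the plain letters a to z are counted; the function names are hypothetical.

```python
from collections import Counter
from pathlib import Path
import string


def count_letters(lines):
    """Count occurrences of each English letter a-z in an iterable of lines, ignoring case."""
    counts = Counter()
    for line in lines:
        # Lower-case first so 'E' and 'e' are counted together; skip digits, spaces, punctuation.
        counts.update(ch for ch in line.lower() if ch in string.ascii_lowercase)
    return counts


def count_letters_in_files(folder):
    """Read every .txt file in `folder` line by line and add up the letter counts.

    Assumption: the files are UTF-8 encoded. str(path) keeps this working on Python 3.5,
    where open() does not yet accept Path objects.
    """
    total = Counter()
    for path in sorted(Path(folder).glob("*.txt")):
        with open(str(path), encoding="utf-8") as f:
            # A file object yields one line at a time, so large files are not loaded at once.
            total += count_letters(f)
    return total
```

With the texts saved in a (hypothetical) folder named `shakespeare_txt`, `count_letters_in_files("shakespeare_txt").most_common()` returns `(letter, count)` pairs sorted from most to least frequent, which is the order used in the table.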

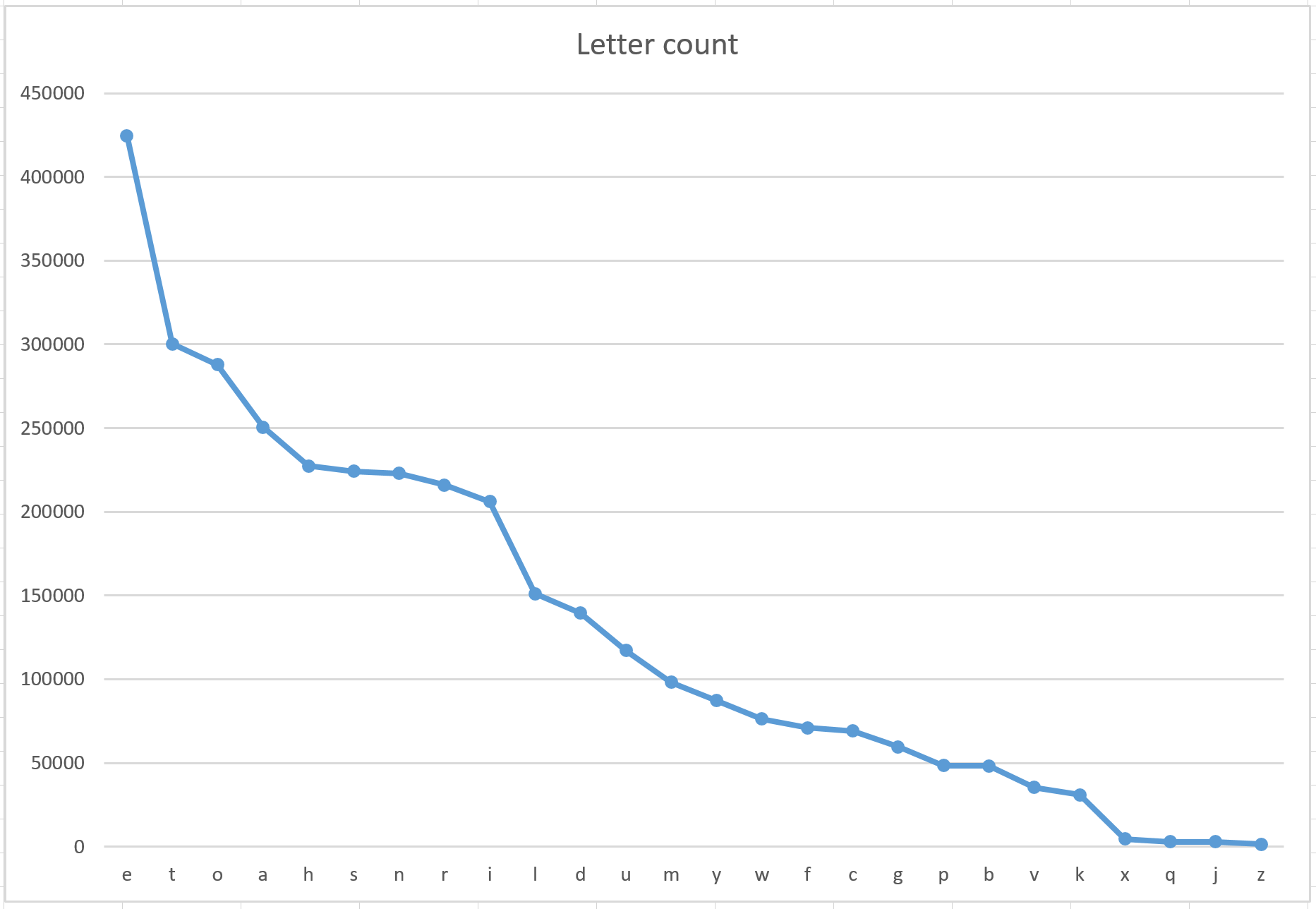

We can see that the letter counts fall off steeply with rank, roughly resembling an exponentially decaying distribution.
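One way to check the "exponentially dropping" impression (a sketch, not part of the original analysis) is to fit straight lines to the counts from the table on two scales: log count against rank, which is a straight line for exponential decay, and log count against log rank, which is a straight line for a Zipf-style power law. The fit with the higher R² is the better straight-line description on that scale. With only 26 points and a sharp drop for x, q, j and z, treat the comparison as a rough indication only. Plain least squares is used so the sketch needs no third-party packages.

```python
import math

# Letter counts copied from the table, sorted from most to least frequent (e ... z).
LETTER_COUNTS = [
    424718, 300213, 287878, 250548, 227474, 224207, 222907, 216036, 206018,
    151036, 139409, 117145, 98215, 87237, 76331, 70909, 69184, 59685,
    48470, 48311, 35293, 30909, 4671, 2862, 2829, 1350,
]


def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b, r_squared)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return a, b, 1 - ss_res / ss_tot


ranks = list(range(1, len(LETTER_COUNTS) + 1))
log_counts = [math.log(c) for c in LETTER_COUNTS]

# Exponential decay: count ~ C * exp(b * rank), so log(count) is linear in rank.
_, exp_slope, exp_r2 = linear_fit(ranks, log_counts)
# Power law (Zipf-like): count ~ C * rank**b, so log(count) is linear in log(rank).
_, pow_slope, pow_r2 = linear_fit([math.log(r) for r in ranks], log_counts)

print("exponential fit: slope = %.3f, R^2 = %.3f" % (exp_slope, exp_r2))
print("power-law fit:   slope = %.3f, R^2 = %.3f" % (pow_slope, pow_r2))
```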

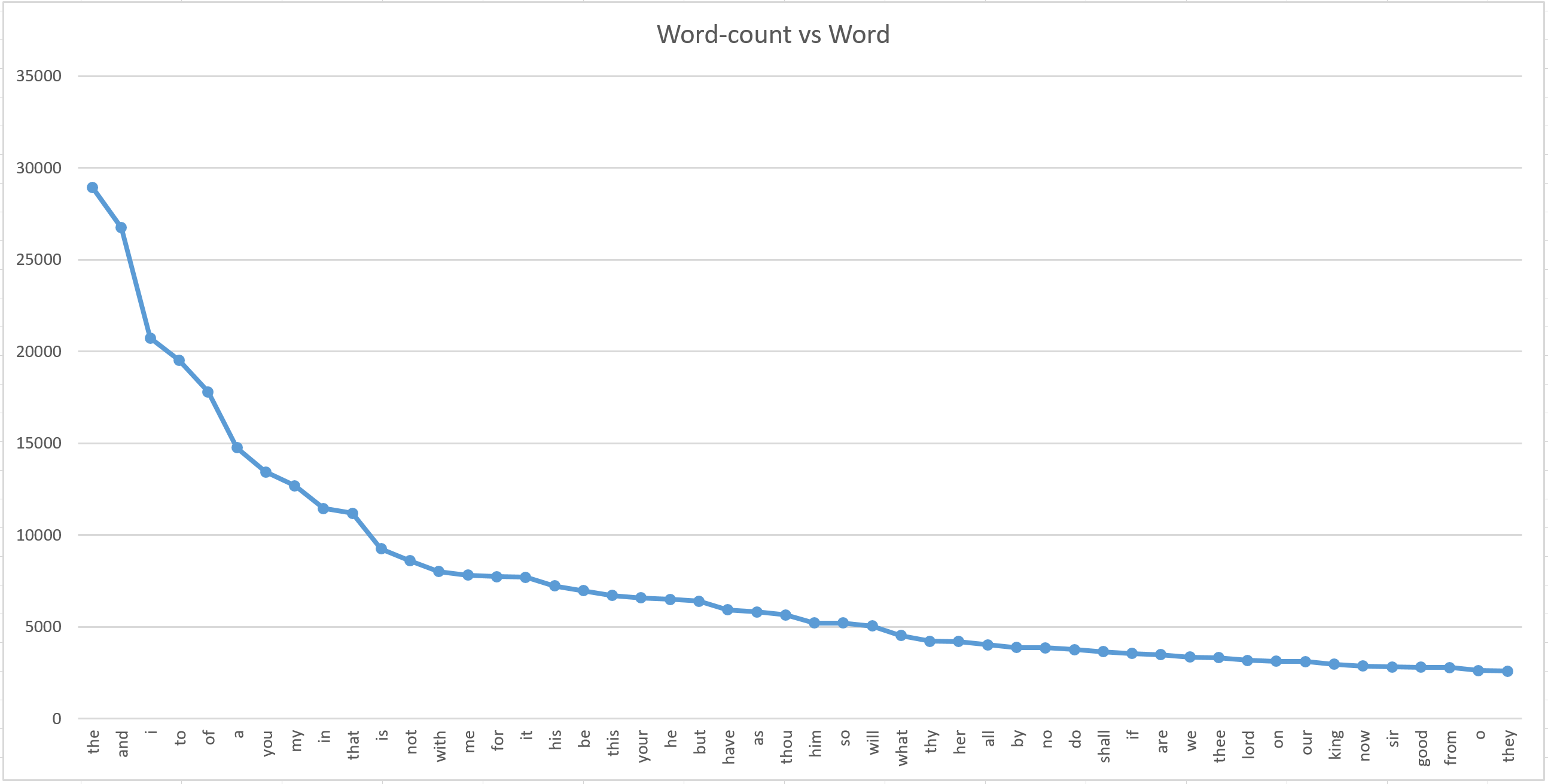

We can see that the most frequently used words are 'the', 'and', 'i', and so on. Some archaic words were also used often, such as 'thee', 'thou' and 'thy'. Note that there were more than 30,000 unique words across all of his works; the plot above shows only the 50 most frequent ones.
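Word counts work the same way as letter counts, except that each line is first split into words. The sketch below assumes a word is a run of letters, optionally joined by internal apostrophes (so "o'er" stays one word), and lower-cases everything so that "The" and "the" are merged. The original tokenization rule is not stated, so this is an assumption, and choices such as how to treat "'tis" or character names in speech headings will shift the unique-word total somewhat. `count_words` is a hypothetical helper name.

```python
import re
from collections import Counter

# Assumption: a word is letters a-z, optionally with internal apostrophes ("o'er").
# A leading apostrophe is dropped, so "'tis" is counted as "tis".
WORD_RE = re.compile(r"[a-z]+(?:'[a-z]+)*")


def count_words(lines):
    """Return a Counter of lower-cased words found in an iterable of lines."""
    counts = Counter()
    for line in lines:
        counts.update(WORD_RE.findall(line.lower()))
    return counts


sample = [
    "To be, or not to be: that is the question:",
    "Whether 'tis nobler in the mind to suffer",
]
words = count_words(sample)
print(len(words), "unique words")
print(words.most_common(3))
```

On the full texts, `len(words)` gives the number of unique words and `words.most_common(50)` gives the top 50 words for the plot.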